Just one of the explicit AI images of Taylor Swift was viewed over 47 million times on X

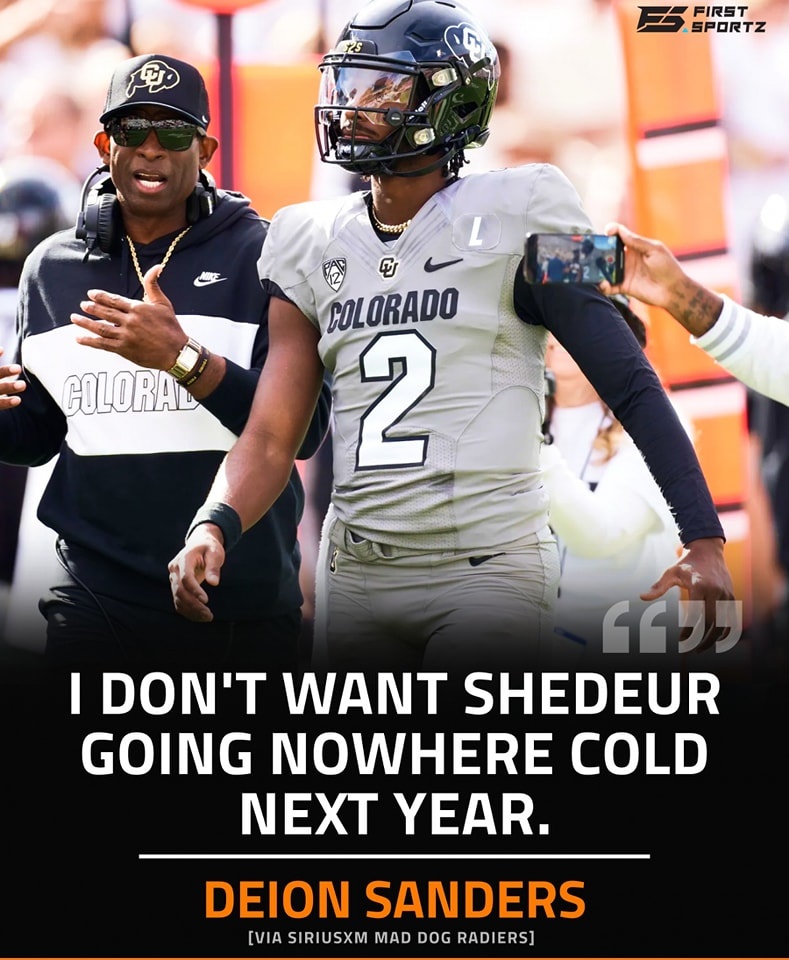

Taylor Swift is not taking any prisoners as she reportedly gets suited up to take legal action over explicit AI images that were distributed across multiple social media platforms.

The 34-year-old was allegedly left “furious” after deepfake images of her were digitally created that showed the singer in a number of compromising poses.

The AI-generated images were shared across social media platforms like Reddit, Facebook, and X – the last being where the images were first published – before they were taken down by the respective companies.

Speaking to NBC News on Friday, Microsoft CEO Satya Nadella emphasized the company’s desire to act fast as it works to tackle the issue at its root.

Taylor Swift is allegedly taking legal action against whoever distributed explicit images of her on social media. Credit: Amy Sussman/Getty

“Yes, we have to act,” Nadella said when asked about the images of Swift. “I think we all benefit when the online world is a safe world. And so I don’t think anyone would want an online world that is completely not safe for both content creators and content consumers. So therefore, I think it behooves us to move fast on this.”

Microsoft has invested in artificial intelligence through its backing of OpenAI and has built AI tools of its own, including Copilot.

“I go back to what I think’s our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced,” Nadella continued. “And there’s a lot to be done and a lot being done there.

“But it is about global, societal, you know, I’ll say convergence on certain norms,” he added. “Especially when you have law and law enforcement and tech platforms that can come together, I think we can govern a lot more than we give ourselves credit for.”

Although the claim has not been independently verified, 404 Media reported at the time that the images were traced back to a Telegram group chat, where members said they used Microsoft’s generative-AI tool, Designer, to make the vile content before distributing it to other platforms.

Microsoft is working “fast” to take down the images of Swift on social media. Credit: Gotham/GC Images/Getty

The Sun published a report in which it confirmed that one of the lewd images was viewed over 47 million times, while others were seen approximately 27 million times.

“Our Code of Conduct prohibits the use of our tools for the creation of adult or non-consensual intimate content, and any repeated attempts to produce content that goes against our policies may result in loss of access to the service,” Microsoft said in a statement to 404 Media. “We have large teams working on the development of guardrails and other safety systems in line with our responsible AI principles, including content filtering, operational monitoring and abuse detection to mitigate misuse of the system and help create a safer environment for users.”

One of the lewd images of Swift was viewed over 47 million times before it was taken down. Credit: James Devaney/GC Images/Getty

In an update, Microsoft also stated that it takes reports of this nature “very seriously” and is “committed to providing a safe experience for everyone.”

“We have investigated these reports and have not been able to reproduce the explicit images in these reports. Our content safety filters for explicit content were running and we have found no evidence that they were bypassed so far,” it continued.

“Out of an abundance of caution, we have taken steps to strengthen our text filtering prompts and address the misuse of our services,” the statement added.

After the images went viral, many people spoke out about how Taylor Swift was living every woman’s “worst nightmare”, as they called for change when it came to AI.