Taylor Swift ‘furious’ about AI-generated fake nude images, considers legal action: report

Taylor Swift is reportedly “furious” that AI-generated nude images of her were circulating across social media on Thursday, and she’s weighing possible legal action against the site responsible for generating the photos, according to a report.

“Whether or not legal action will be taken is being decided but there is one thing that is clear: these fake AI generated images are abusive, offensive, exploitative and done without Taylor’s consent and/or knowledge,” a source close to the 34-year-old pop star told the Daily Mail.

“Taylor’s circle of family and friends are furious, as are her fans obviously. They have the right to be, and every woman should be,” the source added, noting that the X account that originally posted the X-rated content “does not exist anymore.”

A source close to Swift added, per the news site: “The door needs to be shut on this. Legislation needs to be passed to prevent this and laws must be enacted.”

After the pics took the internet by storm Thursday morning, Swifties banded together and tried to bury the images by flooding the platform with positive posts about the “Shake It Off” singer.

A source close to Taylor Swift told the Daily Mail that the pop star is considering legal action after X account @FloridaPigMan used artificial intelligence to generate nude images of her.

As of Thursday afternoon, the photos in question appeared to have been yanked from the platform. They showed Swift in various sexualized positions at a Kansas City Chiefs game, a nod to her highly publicized romance with the team’s tight end, Travis Kelce.

The crude images were traced back to an account under the handle @FloridaPigMan, a search for which no longer returns any results on X.

The account reportedly obtained the images from Celeb Jihad, a site that hosts a collection of fake pornographic imagery, or “deepfakes,” made with celebrities’ likenesses.

Once posted to X, the images were viewed more than 45 million times, reposted some 24,000 times and liked by hundreds of thousands of people before @FloridaPigMan, a verified account, was suspended for violating the platform’s policy, according to The Verge.

The images were on X for about 17 hours before their removal, The Verge reported.

“It is shocking that the social media platform even let them be up to begin with,” a source close to Swift told the Daily Mail.

X’s Help Center outlines policies on “synthetic and manipulated media” and “nonconsensual nudity,” both of which prohibit X-rated deepfakes from being posted on the site.

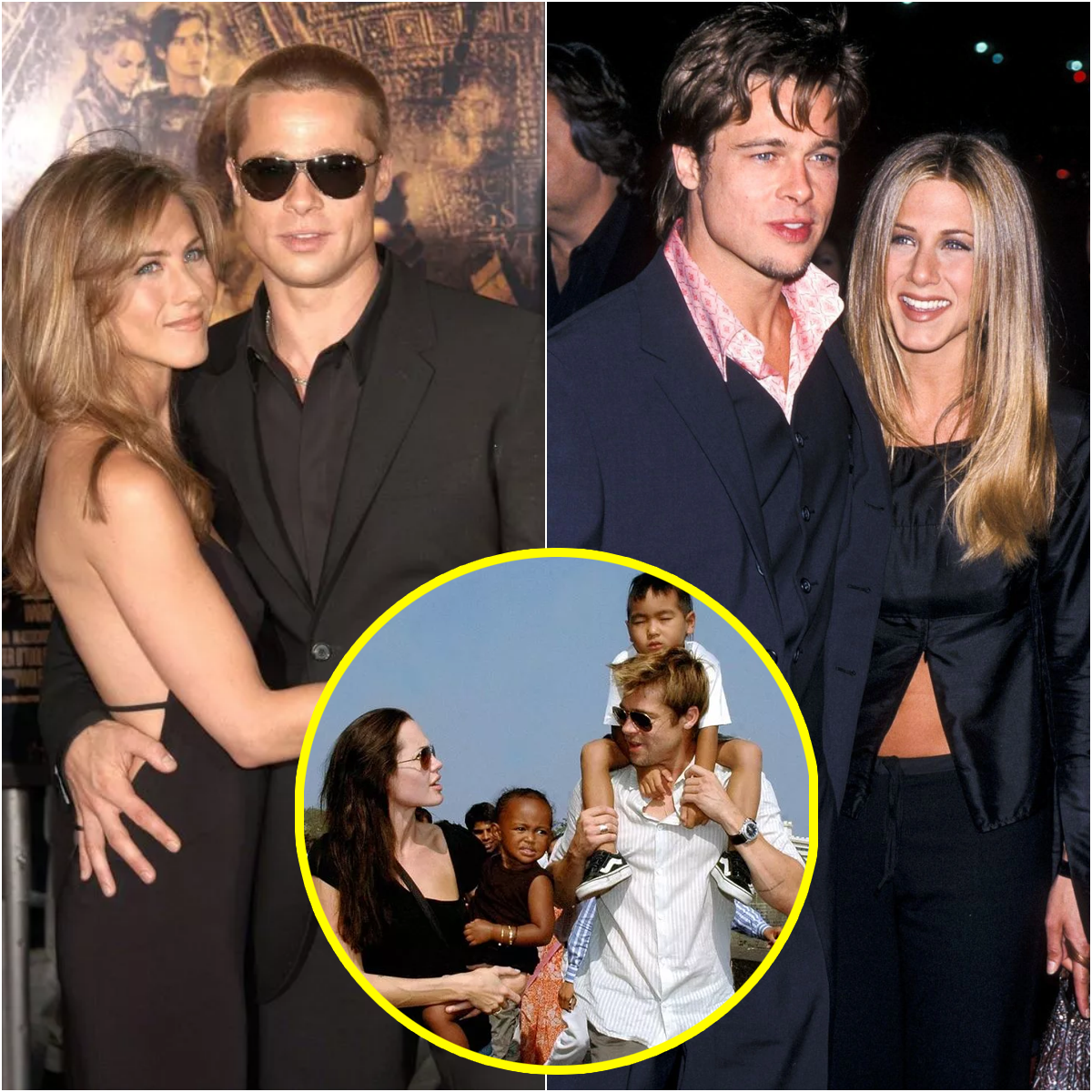

The explicit images of Swift were live for about 17 hours before being yanked from X. In that time, they were viewed more than 45 million times and reposted about 24,000 times. (AFP via Getty Images)

Representatives for X did not immediately respond to The Post’s request for comment. Swift also has yet to comment on the images in question.

Her fanbase had a lot to say on the matter, though, lashing out at legislators for not having more stringent policies surrounding AI’s use in place.

Others argued that this incident exposes a larger issue: “Yes the Taylor Swift AI pie is disgusting but so is the all the deepfake porn out there. … It needs to be regulated because it is done without consent,” one X user wrote.


“I wish the public was horrified by AI porn without Taylor Swift being involved,” another user, @unlikelydarling, wrote on Jan. 25. “The disgusting thing is this could be the only thing that moves the needle and gets the ball rolling on a crackdown. Don’t ever tell me she doesn’t have power to spur change.”

It’s unclear what legal action could be taken against @FloridaPigMan, as regulations around AI vary from state to state. It’s also unclear whether Swift would sue Celeb Jihad, X or the user behind the X account.

Swift previously threatened legal action against Celeb Jihad in 2011 after it shared another fake nude image, but nothing came of it: the site claimed it is a “satire” rather than a pornographic website and pointed to disclaimers stating that it respects A-listers’ intellectual property, according to the Daily Mail.

The songstress’ loyal legion of Swifties said the incident sheds light on a larger issue surrounding deepfakes, as women, famous or not, have fallen victim to the scheme.

Nonconsensual deepfake pornography has also been made illegal in Texas, Minnesota, New York, Hawaii and Georgia, though those laws have not stopped AI-generated nude images from spreading at high schools in New Jersey and Florida, where male classmates shared explicit deepfake images of female students.

Last week, Reps. Joseph Morelle (D-NY) and Tom Kean (R-NJ) reintroduced a bill that would make the nonconsensual sharing of digitally altered pornographic images a federal crime, punishable by jail time, a fine or both.

The “Preventing Deepfakes of Intimate Images Act” was referred to the House Committee on the Judiciary, but the committee has yet to decide whether to advance the bill.

Aside from making the sharing of digitally altered intimate images a criminal offense, Morelle and Kean’s proposed legislation would also allow victims to sue offenders in civil court.